What is the Jacobian Matrix?

Hey folks, back with another intriguing concept that’s pretty crucial in engineering circles. You might be wondering, “Why are you here, Chanaka? There’s already a ton of info out there on this topic!” Well, true, but hear me out. I noticed there’s a shortage of beginner-friendly resources on the Jacobian Matrix, so I figured I’d break it down in simple terms based on what I’ve grasped.

So, what’s the deal with the Jacobian Matrix? Let’s dive in.

Before you touch the concept of the Jacobian matrix, you need to know about derivatives and gradients.

Important Key Words

- Scalar valued function — a function that gives a single value as output

Ex: f(x) = 3x + 2, which gives 5 when x = 1

Notations

- x̄ (x bar) or bold x, as opposed to plain x, denotes that x is a vector

- f̄ (f bar) or bold f, as opposed to plain f, denotes that f is a vector

Derivatives

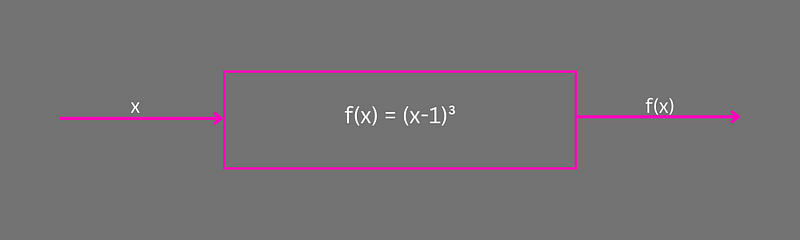

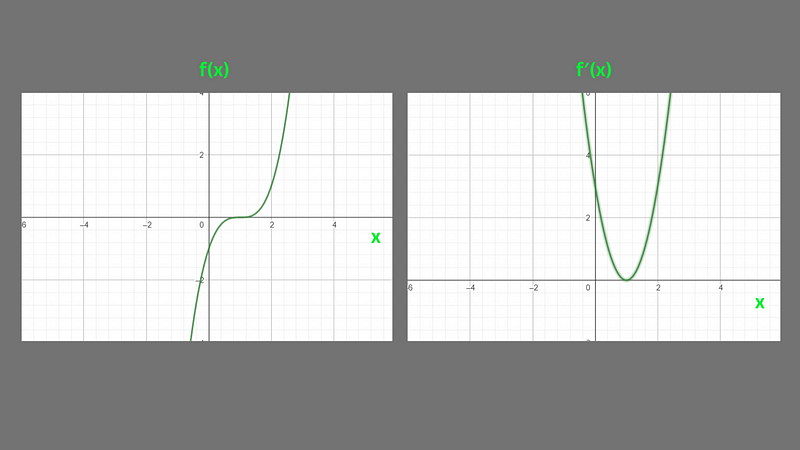

f(x) = (x-1)³

f′(x) = 3 (x-1)²

Here we are dealing with scalar-valued functions of a single input parameter. The derivative is nothing but the sensitivity of the function f(x) to a change in x. Put simply, it is the rate of change of f(x) at a given x.

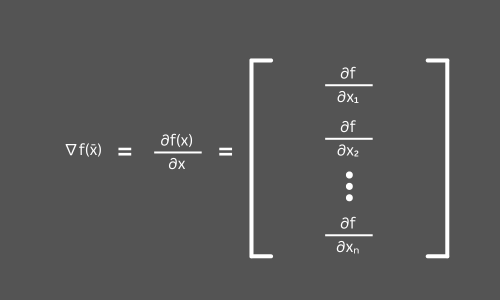
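To make the "rate of change" idea concrete, here is a small sketch that compares the formula f′(x) = 3 (x-1)² with a numerical estimate. The helper name `central_difference` and the step size `h` are my own choices for illustration, not something from a particular library.

```python
def f(x):
    """The example function from above: f(x) = (x - 1)^3."""
    return (x - 1) ** 3


def f_prime(x):
    """Its derivative from above: f'(x) = 3 (x - 1)^2."""
    return 3 * (x - 1) ** 2


def central_difference(func, x, h=1e-5):
    """Estimate func'(x) by nudging x a little in both directions.

    h is an assumed small step; the estimate is
    (func(x + h) - func(x - h)) / (2 * h).
    """
    return (func(x + h) - func(x - h)) / (2 * h)


if __name__ == "__main__":
    for x in (0.0, 1.0, 3.0):
        numeric = central_difference(f, x)
        exact = f_prime(x)
        print(f"x = {x}: numeric = {numeric:.6f}, formula = {exact:.6f}")
```

The two columns should agree to several decimal places, which is a handy sanity check whenever you derive a derivative by hand.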

Gradient

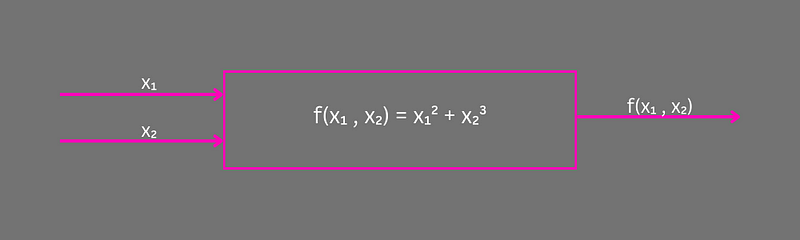

Here we are still dealing with scalar-valued functions, but now with multiple input parameters.

∇f(x̄) — Here x̄ means that we have a vector of inputs

Let’s take x̄ = [ x₁ x₂ … xₙ ]ᵀ. Here x̄ is a column vector of n variables.

Gradient = ∇f = ∇f(x̄)

It is an n × 1 vector whose i-th entry is the partial derivative ∂f / ∂xᵢ (whether it is a column or a row depends on the way you define x̄).

Ex: f(x₁, x₂) = x₁² + x₂³

∇f(x̄) = [ 2x₁ 3x₂² ]ᵀ

So what do 2x₁ and 3x₂² mean here?

2x₁: the sensitivity of the function f to a change in the x₁ direction

3x₂²: the sensitivity of the function f to a change in the x₂ direction
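The same sanity check works for the gradient: nudge one input at a time and see how much f changes. This is only a sketch; `numerical_gradient`, `analytic_gradient`, and the step size `h` are names and values I picked for illustration.

```python
def f(x):
    """The example function: f(x1, x2) = x1^2 + x2^3."""
    x1, x2 = x
    return x1 ** 2 + x2 ** 3


def analytic_gradient(x):
    """Gradient worked out by hand: [2*x1, 3*x2^2]."""
    x1, x2 = x
    return [2 * x1, 3 * x2 ** 2]


def numerical_gradient(func, x, h=1e-5):
    """Estimate each partial derivative with a central difference.

    Entry j is the sensitivity of func to a change in x[j] only;
    every other input is held fixed. h is an assumed small step.
    """
    grad = []
    for j in range(len(x)):
        forward = list(x)
        backward = list(x)
        forward[j] += h
        backward[j] -= h
        grad.append((func(forward) - func(backward)) / (2 * h))
    return grad


if __name__ == "__main__":
    point = [1.0, 2.0]
    print("numeric:", numerical_gradient(f, point))
    print("formula:", analytic_gradient(point))
```

Note that each entry is computed by changing only one input, which is exactly what "sensitivity in the x₁ direction" means.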

Jacobian Matrix

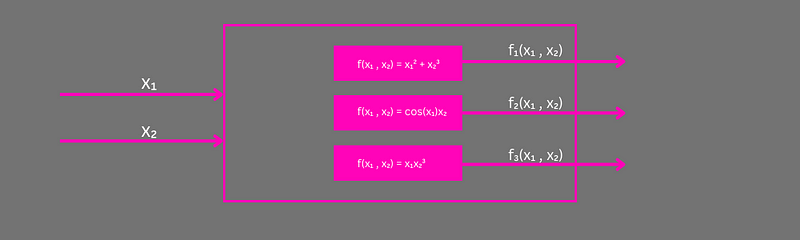

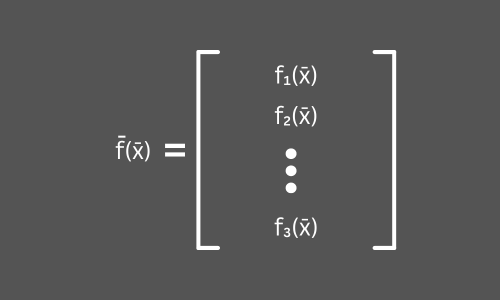

Here we have 3 scalar-valued functions stacked on top of one another, so we are dealing with a vector output, not a single value. In general, we calculate the Jacobian matrix for m scalar-valued functions stacked on top of one another. In other words, the output of f(x̄) is a vector of scalar-valued functions.

f(x̄) — Here x̄ means that we have a vector of inputs. Bold f means that we have a vector of outputs (Bold f is another alternative notation for f bar).

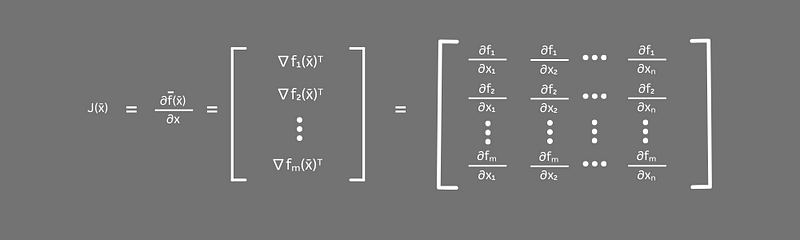

So the Jacobian matrix J(x̄) is nothing but the partial derivatives of each scalar-valued component function f₁, f₂, …, fₘ of the vector-valued function f (that is, the gradient of each component).

As you know, f(x̄) has a column vector as its output (See 2.1). So we can write the Jacobian matrix by stacking the transposed gradients ∇f₁ᵀ, ∇f₂ᵀ, …, ∇fₘᵀ as rows.

Finally, expanding each transposed gradient gives an m × n matrix.
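Written out, the stacking described above looks like this. I have reconstructed it from the definitions in this article (m outputs, n inputs), so treat it as a sketch of the standard form rather than a copy of the original figures.

```latex
\mathbf{f}(\bar{x}) =
\begin{bmatrix} f_1(\bar{x}) \\ f_2(\bar{x}) \\ \vdots \\ f_m(\bar{x}) \end{bmatrix},
\qquad
J(\bar{x}) =
\begin{bmatrix}
\nabla f_1(\bar{x})^{T} \\ \nabla f_2(\bar{x})^{T} \\ \vdots \\ \nabla f_m(\bar{x})^{T}
\end{bmatrix}
=
\begin{bmatrix}
\dfrac{\partial f_1}{\partial x_1} & \cdots & \dfrac{\partial f_1}{\partial x_n} \\
\vdots & \ddots & \vdots \\
\dfrac{\partial f_m}{\partial x_1} & \cdots & \dfrac{\partial f_m}{\partial x_n}
\end{bmatrix}
```

Each row belongs to one output function, and each column belongs to one input variable.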

Here ∂f₁ / ∂x₁ indicates how sensitive the function f₁ is to a change in the x₁ direction.

So we define Jᵢⱼ = J(x̄)ᵢⱼ = ∂fᵢ / ∂xⱼ as the sensitivity of function fᵢ to a change in the xⱼ direction (i = row number, j = column number).
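To see the row and column rule in action, here is a small sketch that builds a Jacobian numerically, one column per input. The vector function below (2 inputs, 3 outputs) is a made-up example for illustration, not the one from the figures, and `numerical_jacobian` is a helper name I chose.

```python
import math


def f(x):
    """Hypothetical vector-valued function with n = 2 inputs, m = 3 outputs."""
    x1, x2 = x
    return [x1 ** 2 + x2 ** 3, x1 * x2, math.sin(x1)]


def analytic_jacobian(x):
    """Hand-derived Jacobian of the hypothetical f above (3 rows, 2 columns)."""
    x1, x2 = x
    return [
        [2 * x1, 3 * x2 ** 2],   # gradient of f1, written as a row
        [x2, x1],                # gradient of f2, written as a row
        [math.cos(x1), 0.0],     # gradient of f3, written as a row
    ]


def numerical_jacobian(func, x, h=1e-5):
    """Estimate J[i][j] = d f_i / d x_j with central differences.

    Nudging input j fills in column j for every output i at once.
    h is an assumed small step.
    """
    m, n = len(func(x)), len(x)
    jac = [[0.0] * n for _ in range(m)]
    for j in range(n):
        forward = list(x)
        backward = list(x)
        forward[j] += h
        backward[j] -= h
        f_forward, f_backward = func(forward), func(backward)
        for i in range(m):
            jac[i][j] = (f_forward[i] - f_backward[i]) / (2 * h)
    return jac


if __name__ == "__main__":
    point = [1.0, 2.0]
    for row in numerical_jacobian(f, point):
        print([round(value, 4) for value in row])
```

The result has m = 3 rows and n = 2 columns, matching the m × n shape described above.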

I hope you have understood the concept.

If you found this useful, follow me for future articles. It motivates me to write more for you.
