Streamlining Your Machine Learning Workflow with Scikit-Learn Pipelines — Pipeline Explained


When you’re diving into machine learning, there’s a crucial step you must take before training your model — splitting your data into a training set and a test set. Both datasets undergo data cleaning and preprocessing before you feed them into your machine-learning model. But let’s face it, writing repetitive code for these steps is far from efficient. This is where the Scikit-Learn pipeline shines.

What is a Scikit-Learn Pipeline?

Think of the Scikit-Learn pipeline as an elegant and efficient way to streamline your machine-learning workflow. Instead of manually coding each preprocessing and transformation step for your training and test datasets, the pipeline allows you to link these steps together seamlessly.

Imagine you have a single pipeline where you can input any data. This data will be automatically transformed into the appropriate format for model training or prediction. The Scikit-Learn pipeline does just that, making your code cleaner, more readable, and easier to adjust.

Breaking Down the Pipeline Components

In a typical machine learning task, you often perform a sequence of transformations on your raw dataset before applying a final estimator. Here’s how each component fits into the pipeline.

Transformers

These are steps that transform your data. Each transformer must implement fit and transform methods; many also provide a fit_transform method that does both in one call. Examples include scaling, normalization, and feature extraction.

Didn’t get it?

Don’t worry. I will explain it.

In scikit-learn, transformers are classes designed to preprocess and prepare your data for machine learning models. They play a crucial role in the machine learning pipeline, a sequence of steps that transforms raw data into a format suitable for training and using a model.

Key Points about Transformers

1. Function

Unlike predictors (which focus on model training and prediction), transformers focus solely on data transformation. They manipulate the features (columns) in your data to make them more suitable for modeling.

2. Tasks Performed

Transformers can perform various data preprocessing tasks, including:

- Scaling: standardizing or normalizing features so they share a similar range.

- Encoding: converting categorical data into numerical representations.

- Feature Selection: choosing a subset of relevant features.

- Imputation: filling missing values in the data.

- Custom Transformations: creating your own transformers for specific data manipulation needs.

3. Methods

Transformers typically implement two core methods, plus a convenience method that combines them:

- fit(X): analyzes the training data (X) to learn the parameters or statistics needed for the transformation. For example, a scaler calculates the mean and standard deviation during fitting.

- transform(X): applies the learned transformation to data (X). Using the parameters learned in fit, the transformer modifies the features in the data.

- fit_transform(X): combines fit and transform into one step. It is commonly used during preprocessing to fit the transformer to the training data and transform that same data in a single call.
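To make the three methods concrete, here is a minimal sketch of a custom transformer (the "Custom Transformations" task above). QuantileClipper and its upper parameter are hypothetical, made up for illustration; BaseEstimator and TransformerMixin are scikit-learn's real base classes, and TransformerMixin supplies fit_transform by calling fit and then transform.

```python
import numpy as np
from sklearn.base import BaseEstimator, TransformerMixin


class QuantileClipper(BaseEstimator, TransformerMixin):
    """Hypothetical custom transformer: caps each feature at a quantile learned in fit."""

    def __init__(self, upper=0.75):
        self.upper = upper  # quantile used as the cap, e.g. 0.75 = 75th percentile

    def fit(self, X, y=None):
        # Learn one cap per column from the training data only
        self.upper_bounds_ = np.quantile(np.asarray(X, dtype=float), self.upper, axis=0)
        return self  # returning self allows chaining, e.g. QuantileClipper().fit(X).transform(X)

    def transform(self, X):
        # Apply the caps learned in fit; values below the cap are left unchanged
        return np.minimum(np.asarray(X, dtype=float), self.upper_bounds_)


X_train = np.array([[1.0], [2.0], [3.0], [4.0], [100.0]])
clipper = QuantileClipper(upper=0.75)
X_clipped = clipper.fit_transform(X_train)  # fit_transform is inherited from TransformerMixin

# transform reuses the cap learned from X_train on new data
X_new_clipped = clipper.transform(np.array([[50.0], [0.5]]))
```

The learned cap is stored in an attribute ending in an underscore (upper_bounds_), following scikit-learn's convention for values estimated during fit.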

Example

Imagine you have a dataset with features like age (numerical) and city (categorical). A pipeline might include the following transformers:

- StandardScaler: learns the mean and standard deviation of the age column during fit, then subtracts the mean and divides by the standard deviation during transform.

- OneHotEncoder: learns the unique cities in the city column during fit. During transform, it creates a separate binary column for each city and applies that same encoding to new data.
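Here is a minimal sketch of that age/city example, assuming a tiny made-up DataFrame (the ages and cities are invented). Each transformer is fit on the training rows and then applied to an unseen row, so you can see what it learns during fit: mean_ and scale_ for StandardScaler, categories_ for OneHotEncoder.

```python
import pandas as pd
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Made-up training rows: one numeric column (age) and one categorical column (city)
train = pd.DataFrame({
    "age": [25, 35, 45],
    "city": pd.Series(["Paris", "London", "Paris"], dtype=object),
})
new = pd.DataFrame({"age": [30], "city": pd.Series(["London"], dtype=object)})

scaler = StandardScaler().fit(train[["age"]])   # learns mean_ (35) and scale_ (population std)
encoder = OneHotEncoder().fit(train[["city"]])  # learns categories_ (London, Paris, sorted)

new_age = scaler.transform(new[["age"]])               # (30 - 35) / scale_
new_city = encoder.transform(new[["city"]]).toarray()  # output is sparse by default; densify it
print(encoder.categories_, new_age, new_city)
```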

Transformers are essential building blocks in scikit-learn pipelines. Good preprocessing can improve both the performance of your machine-learning models and how well they generalize to new data.

Predictors

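These are the final step in your pipeline. A predictor must implement fit, and it must also implement predict if you want to call predict on the pipeline. Some estimators, such as clustering models, additionally provide a combined fit_predict method.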

I don’t think you want an explanation for predictors.
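Still, it helps to see how a pipeline hands data from a transformer to a predictor. A minimal sketch, assuming made-up data where y = 2x + 1 and scikit-learn's LinearRegression as the predictor (the article's own model, RandomForestRegressor, appears later). During pipe.fit the scaler is fit and transforms the data, then the regressor is fit on the scaled output; during pipe.predict the scaler only transforms.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

X = np.array([[1.0], [2.0], [3.0], [4.0]])
y = np.array([3.0, 5.0, 7.0, 9.0])  # made-up data following y = 2x + 1

pipe = Pipeline(steps=[
    ("scaler", StandardScaler()),      # transformer: fit_transform during pipe.fit
    ("regressor", LinearRegression()), # predictor: fit during pipe.fit, predict during pipe.predict
])
pipe.fit(X, y)

# pipe.predict runs scaler.transform and then regressor.predict
pred = pipe.predict(np.array([[5.0]]))
print(pred)
```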

Benefits of Using Pipelines

- Code Efficiency: no more repetitive code. The pipeline applies the sequence of transformations and the final prediction for you.

- Readability: your code is much cleaner and easier to understand.

- Parameter Tuning: pipelines make it simple to tune hyperparameters with GridSearchCV without data leakage, because the preprocessing steps are refit on the training folds of each cross-validation split and never see the validation fold.

- Modularity: each step in your pipeline can be easily adjusted or replaced.
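Here is a hedged sketch of the Parameter Tuning point, assuming synthetic data from make_regression and a Ridge regressor swapped in for speed. Inside a pipeline, a step's hyperparameters are addressed as step name, double underscore, parameter name, and GridSearchCV refits the entire pipeline, scaler included, on the training folds of every split.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic regression data, used here only for illustration
X, y = make_regression(n_samples=100, n_features=5, noise=10.0, random_state=0)

pipe = Pipeline(steps=[("scaler", StandardScaler()), ("ridge", Ridge())])

# "ridge__alpha" means: the alpha parameter of the step named "ridge"
param_grid = {"ridge__alpha": [0.1, 1.0, 10.0]}

# For each of the 5 splits, the scaler is fit on the training folds only
search = GridSearchCV(pipe, param_grid, cv=5)
search.fit(X, y)
print(search.best_params_, search.best_score_)
```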

Pipelines in Action

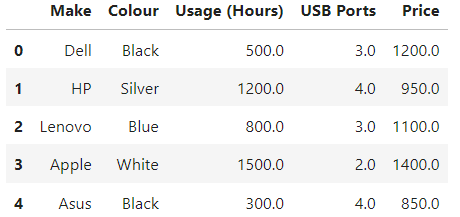

Let’s understand the dataset first.

Note: The dataset below is not real. I generated it with ChatGPT for this example.

- Make — Brand of the laptop (e.g., Dell, HP, Lenovo, Apple, Asus, Acer)

- Colour — Color of the laptop (e.g., Black, Silver, Blue, White, Red)

- Usage — Usage in hours (unit: hours)

- USB Ports — Number of USB ports available on the laptop (unit: count)

- Price — Price of the laptop in dollars (unit: dollars)

It’s time to code…

```python
# Standard imports
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
# Jupyter notebook magic; remove this line outside a notebook
%matplotlib inline

# Scikit-Learn imports
from sklearn.pipeline import Pipeline
from sklearn.impute import SimpleImputer  # To fill missing values
from sklearn.preprocessing import OneHotEncoder  # To turn our categorical variables into numbers
from sklearn.compose import ColumnTransformer
from sklearn.ensemble import RandomForestRegressor  # Our estimator/model
from sklearn.model_selection import train_test_split  # To split data into train and test sets

# Import our dataset
data = pd.read_csv('./data/laptop_sales.csv')  # Replace with your actual path
```

This is what our data looks like:

```python
data.head()
```

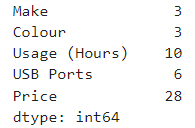

```python
# Check missing values
print(data.isna().sum())

# Drop the rows with no labels in our target variable
data.dropna(subset=["Price"], inplace=True)
print(data.isna().sum())

# Split data into X & y
X = data.drop("Price", axis=1)
y = data["Price"]
```

We’ve dropped the rows with no labels and split our data into X and y. Now let’s create a Pipeline() to fill the rest of the missing values, encode the categorical columns (turn them into numbers), and fit a model.

Let’s define the categorical, port, and numeric features, then build a transformer for each.

We’ll do the following with the Pipeline() class:

- Categorical transformer: fill missing categorical values with the value 'missing' and then one-hot encode them.

- Ports transformer: fill missing values in the USB Ports column with the value 3.

- Numeric transformer: fill missing numeric values with the mean of the rest of the column.

```python
# Define categorical columns
categorical_features = ["Make", "Colour"]

# Create categorical transformer (imputes missing values, then one-hot encodes them)
categorical_transformer = Pipeline(steps=[
    ('imputer', SimpleImputer(strategy='constant', fill_value='missing')),
    ('onehot', OneHotEncoder(handle_unknown='ignore'))
])

# Define port feature
port_feature = ["USB Ports"]

# Create port transformer (fills all missing USB Ports values with 3)
port_transformer = Pipeline(steps=[
    ('imputer', SimpleImputer(strategy='constant', fill_value=3)),
])

# Define numeric features
numeric_features = ["Usage (Hours)"]

# Create a transformer for filling all missing numeric values with the mean
numeric_transformer = Pipeline(steps=[
    ('imputer', SimpleImputer(strategy='mean'))
])
```

categorical_features: specifies the columns in the dataset that contain categorical data, "Make" and "Colour".

categorical_transformer: this pipeline consists of two steps:

- SimpleImputer fills missing values in the categorical features with a constant value, in this case 'missing'.

- OneHotEncoder encodes each categorical feature as a set of binary (0/1) columns, one per category. The handle_unknown='ignore' parameter tells the encoder to output all zeros for a category it did not see during fit, instead of raising an error.

port_feature: specifies the column in the dataset that contains port information, "USB Ports".

port_transformer: this pipeline consists of one step:

- SimpleImputer fills missing values in the "USB Ports" column with a constant value, in this case 3.

numeric_features: specifies the column in the dataset that contains numeric data, "Usage (Hours)".

numeric_transformer: this pipeline consists of one step:

- SimpleImputer fills missing values in numeric features with the mean of the respective column, computed from the training data during fit.
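To see what these three transformers do, here is a small sketch that runs them on a few made-up rows (the makes, port counts, and hours are invented). It uses the same settings as the code above and also shows handle_unknown='ignore' turning an unseen make into an all-zero row.

```python
import numpy as np
import pandas as pd
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder

# Made-up rows with a missing value in every column
train = pd.DataFrame({
    "Make": pd.Series(["Dell", np.nan, "HP"], dtype=object),
    "USB Ports": [2.0, np.nan, 4.0],
    "Usage (Hours)": [100.0, 300.0, np.nan],
})

categorical_transformer = Pipeline(steps=[
    ("imputer", SimpleImputer(strategy="constant", fill_value="missing")),
    ("onehot", OneHotEncoder(handle_unknown="ignore")),
])
port_imputer = SimpleImputer(strategy="constant", fill_value=3)
numeric_imputer = SimpleImputer(strategy="mean")

# Make becomes Dell / missing / HP, then one column per category (sorted: Dell, HP, missing)
make_encoded = categorical_transformer.fit_transform(train[["Make"]]).toarray()
ports_filled = port_imputer.fit_transform(train[["USB Ports"]])          # NaN -> 3
usage_filled = numeric_imputer.fit_transform(train[["Usage (Hours)"]])   # NaN -> mean of 100 and 300

# "Apple" was never seen during fit, so it is encoded as all zeros instead of raising an error
unseen = categorical_transformer.transform(
    pd.DataFrame({"Make": pd.Series(["Apple"], dtype=object)})
).toarray()
print(make_encoded, ports_filled, usage_filled, unseen, sep="\n")
```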

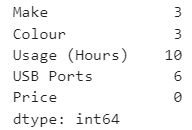

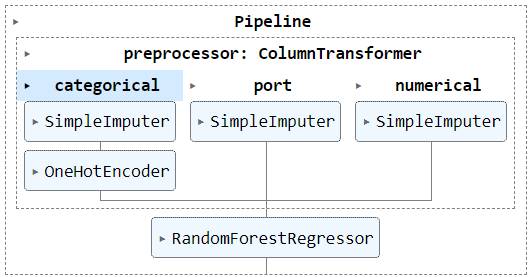

Let’s combine Pipelines with ColumnTransformer()

```python
# Create a column transformer which combines all of the other transformers
preprocessor = ColumnTransformer(
    transformers=[
        # (name, transformer_to_use, columns_to_transform)
        ('categorical', categorical_transformer, categorical_features),
        ('port', port_transformer, port_feature),
        ('numerical', numeric_transformer, numeric_features)
    ])
```

This code creates a ColumnTransformer named preprocessor that applies a different preprocessing pipeline to the categorical, port, and numeric columns. Each entry is a tuple of a name, the transformer to use, and the columns it should operate on, and the outputs of all entries are concatenated side by side. Columns not listed in any entry are dropped by default (remainder='drop'). The preprocessor can then be plugged into a larger machine-learning pipeline, so the same transformations are applied consistently during training and prediction.
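A sketch of the combined preprocessor on four made-up rows, to show what ColumnTransformer produces: the one-hot columns for Make and Colour come first, followed by the imputed USB Ports and Usage (Hours) columns, in the order the transformers are listed. For brevity, the port and numeric steps here are bare SimpleImputer objects rather than one-step pipelines; they behave the same.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder

# Made-up rows mimicking the laptop columns, with some missing values
X_toy = pd.DataFrame({
    "Make": pd.Series(["Dell", "HP", np.nan, "Dell"], dtype=object),
    "Colour": pd.Series(["Black", np.nan, "Silver", "Black"], dtype=object),
    "USB Ports": [2.0, np.nan, 4.0, 3.0],
    "Usage (Hours)": [120.0, np.nan, 300.0, 80.0],
})

categorical_transformer = Pipeline(steps=[
    ("imputer", SimpleImputer(strategy="constant", fill_value="missing")),
    ("onehot", OneHotEncoder(handle_unknown="ignore")),
])
preprocessor = ColumnTransformer(transformers=[
    ("categorical", categorical_transformer, ["Make", "Colour"]),        # 3 + 3 one-hot columns
    ("port", SimpleImputer(strategy="constant", fill_value=3), ["USB Ports"]),
    ("numerical", SimpleImputer(strategy="mean"), ["Usage (Hours)"]),
])

X_prepared = preprocessor.fit_transform(X_toy)
# ColumnTransformer may return a sparse matrix when the result is mostly zeros; densify if so
X_dense = X_prepared.toarray() if hasattr(X_prepared, "toarray") else X_prepared
print(X_dense.shape)
```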

Let’s create a Pipeline() to preprocess and model our data with the ColumnTransformer() and RandomForestRegressor().

```python
# Create the preprocessing and modelling pipeline
model = Pipeline(steps=[
    ('preprocessor', preprocessor),  # fills missing data and makes sure everything is numeric
    ('regressor', RandomForestRegressor())  # models our data
])
```

This uses scikit-learn’s Pipeline class to apply a preprocessing step (preprocessor) followed by a regression model (RandomForestRegressor). The preprocessor handles missing data and converts all features to numbers; the preprocessed data is then passed to the random forest regressor, which learns to predict the price from it. Data preparation and model training are now a single object that you can fit, score, and predict with.
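Because each step has a name, you can inspect or swap parts of a pipeline without rebuilding it. A minimal sketch, assuming a toy one-feature dataset and a LinearRegression chosen purely for illustration: named_steps looks up a step by name, and set_params replaces the step called 'regressor'.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import Pipeline

# Toy data: y = 2x, with one missing x value (imputed as the mean of 1, 2, 4, 5 = 3)
X = np.array([[1.0], [2.0], [np.nan], [4.0], [5.0]])
y = np.array([2.0, 4.0, 6.0, 8.0, 10.0])

model = Pipeline(steps=[
    ("imputer", SimpleImputer(strategy="mean")),
    ("regressor", RandomForestRegressor(n_estimators=10, random_state=0)),
])

# Look up a step by the name given in steps
print(model.named_steps["regressor"])

# Replace the whole step by its name, then refit the pipeline
model.set_params(regressor=LinearRegression())
model.fit(X, y)
pred = model.predict(np.array([[6.0]]))
```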

Let's split the data into training and test sets, then fit the preprocessing and modelling Pipeline() on the training data.

```python
# Split data into train and test sets
np.random.seed(42)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

# Fit the model on the training data
# (when fit() is called on a Pipeline(), fit_transform() is used for the transformers)
model.fit(X_train, y_train)
```

The image below shows information about our Pipeline.

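Let’s evaluate the model on the test data.

```python
model.score(X_test, y_test)
```

I got a score of 0.9482058333233976 here. For a regressor, score() returns the coefficient of determination (R²), not accuracy. You may get a different value depending on the random state.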
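Since laptop_sales.csv isn't included here, the following is a hedged end-to-end sketch on synthetic data that only mimics its columns (the price formula, value ranges, and roughly 10% missing rate are all made up). It rebuilds the same preprocessor and model, then passes raw new rows, including missing values and a make and colour never seen in training, straight to model.predict.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.ensemble import RandomForestRegressor
from sklearn.impute import SimpleImputer
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder

# Hypothetical synthetic stand-in for laptop_sales.csv
rng = np.random.default_rng(42)
n = 200
usage = rng.uniform(0, 5000, size=n)
ports = rng.integers(1, 5, size=n).astype(float)
price = 1500 - 0.1 * usage + 50 * ports + rng.normal(0, 20, size=n)  # made-up formula
make = rng.choice(["Dell", "HP", "Lenovo", "Apple"], size=n).astype(object)
colour = rng.choice(["Black", "Silver", "White"], size=n).astype(object)

# Knock out roughly 10% of each feature to mimic missing data (arrays are modified in place)
for col in (make, colour, usage, ports):
    col[rng.random(n) < 0.1] = np.nan

X = pd.DataFrame({
    "Make": pd.Series(make, dtype=object),
    "Colour": pd.Series(colour, dtype=object),
    "Usage (Hours)": usage,
    "USB Ports": ports,
})
y = pd.Series(price, name="Price")

categorical_transformer = Pipeline(steps=[
    ("imputer", SimpleImputer(strategy="constant", fill_value="missing")),
    ("onehot", OneHotEncoder(handle_unknown="ignore")),
])
preprocessor = ColumnTransformer(transformers=[
    ("categorical", categorical_transformer, ["Make", "Colour"]),
    ("port", SimpleImputer(strategy="constant", fill_value=3), ["USB Ports"]),
    ("numerical", SimpleImputer(strategy="mean"), ["Usage (Hours)"]),
])
model = Pipeline(steps=[
    ("preprocessor", preprocessor),
    ("regressor", RandomForestRegressor(random_state=42)),
])

# random_state makes the split reproducible without touching NumPy's global seed
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
model.fit(X_train, y_train)
r2 = model.score(X_test, y_test)  # R² on held-out data

# Raw new rows: missing values plus an unseen make ("Asus") and colour ("Red")
new_laptops = pd.DataFrame({
    "Make": pd.Series(["Asus", np.nan], dtype=object),
    "Colour": pd.Series(["Black", "Red"], dtype=object),
    "Usage (Hours)": [np.nan, 1200.0],
    "USB Ports": [np.nan, 2.0],
})
preds = model.predict(new_laptops)
print(r2, preds)
```

One design difference from the article: this sketch passes random_state to train_test_split and RandomForestRegressor instead of calling np.random.seed, which keeps both the split and the forest reproducible without relying on NumPy's global state.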

In conclusion, Scikit-Learn pipelines are a powerful tool for streamlining your machine learning workflows. They help keep your code DRY (Don’t Repeat Yourself), improve readability, simplify hyperparameter tuning, and guard against data leakage by fitting preprocessing steps only on training data. Whether you’re a beginner or an experienced data scientist, pipelines can make your work noticeably more efficient and less error-prone. So, next time you’re working on a machine learning project, give Scikit-Learn pipelines a try!