Neural Networks Without the Jargon

Neural networks are a type of machine learning model inspired by the human brain. Just as the brain has interconnected neurons transmitting signals, a neural network has artificial neurons (nodes) connected by weighted links. Those weights are where the network stores what it has learned, and together the neurons process data and learn patterns.

These models learn from examples rather than being explicitly programmed. By training on lots of data, a neural network can adjust its connections (weights) to improve at tasks like recognizing images or understanding speech.

Neural networks are important because they can solve complex problems that are hard to capture with hand-written rules. They excel at tasks like image recognition and speech recognition. Once trained, they also work quickly: a network can process large collections of images or audio far faster than a human expert could review them by hand.

Neural networks power many everyday technologies. One famous example is Google’s search algorithm, which uses neural network techniques to help find relevant results. In short, neural networks are a key driving force behind modern AI, enabling features from voice assistants to recommendation systems.

History and Evolution

The concept of neural networks dates back to the mid-20th century. In 1943, Warren McCulloch and Walter Pitts proposed a mathematical model of artificial neurons that could compute simple logical functions.

Later, in 1958, Frank Rosenblatt introduced the perceptron, an early single-layer neural network that learned to classify inputs into two groups. However, early neural nets were limited in what they could do (a single-layer perceptron cannot solve the XOR problem, because the two classes of XOR cannot be separated by a straight line), and progress slowed in the 1970s (a period often called the first “AI winter”).

In the 1980s, neural networks made a comeback with the widespread adoption of the backpropagation training algorithm. Pioneers like Geoffrey Hinton, David Rumelhart, and Ronald Williams showed that backpropagation could effectively train multi-layer networks (sometimes called multi-layer perceptrons), allowing networks to learn more complex patterns.

This was a breakthrough. Suddenly, neural networks could have one or more hidden layers and still learn, overcoming the earlier limitations.

Through the 1990s, neural networks started finding real applications (like reading handwritten text or predicting financial trends), but they still faced skepticism due to limited computing power and data. The real explosion came in the 2000s and 2010s with the rise of “deep learning.” Thanks to much larger datasets (e.g., millions of images on the internet) and more powerful processors (especially GPUs), researchers built deeper networks (with many layers) and achieved impressive results. A landmark came in 2012, when a deep convolutional neural network called AlexNet won the ImageNet image recognition challenge by a wide margin, outperforming all previous methods.

This victory cemented neural networks (deep learning) as the state-of-the-art for many tasks. Since then, we’ve seen rapid evolution – from recurrent networks handling language translation to transformer models powering advanced AI like GPT. Neural networks have continually evolved to become more accurate and versatile.

Basic Structure

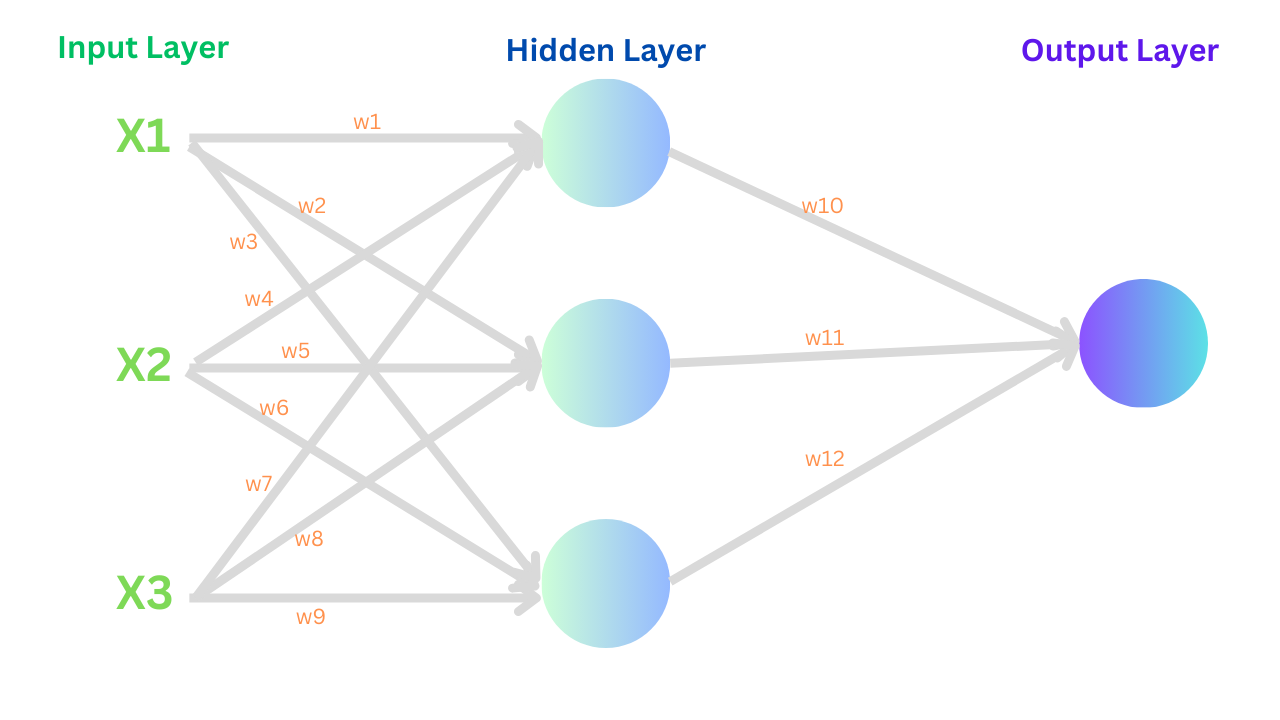

A neural network is structured in layers of neurons. Typically, we have an input layer (which takes in the data), one or more hidden layers (which do computations), and an output layer (which produces the final result).

Each neuron in a layer is connected to neurons in the next layer, and each connection has a weight, a number that indicates the importance of that connection.

Note that input-layer neurons are not counted as computational nodes, because they only pass the input values forward without performing any calculation.

When an input comes into the network, it travels forward through these layers:

- Each neuron takes the outputs from the previous layer, multiplies each by a corresponding weight, adds them up (plus a bias term), and then applies an activation function. The activation function introduces non-linearity; without it, a stack of layers would be no more powerful than a single layer. Common examples are ReLU (which outputs zero for negative inputs and passes positive inputs through unchanged) and sigmoid (which squashes the output to between 0 and 1). This process is akin to a neuron “firing” if the combined input is strong enough.

- The result from each neuron is passed to the next layer, and this continues until the output layer produces the network’s prediction.

Figure 2: A simple neural network with an input layer, one hidden layer, and an output layer. Each circle represents a neuron, arrows represent weighted connections, each Xi represents an input, and each Wi represents a weight. Information flows from the inputs on the left through the hidden neurons to the outputs on the right.
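To make the forward pass concrete, here is a minimal sketch in plain Python of a tiny network with the same input, hidden, output layout: two inputs, a hidden layer of two ReLU neurons, and one output neuron. The weights and biases are hand-picked for illustration rather than learned, and the helper names (`dense`, `forward`, and so on) are our own. They were chosen so that the network computes XOR, the function a single-layer perceptron cannot learn.

```python
import math

def relu(z):
    # Zero for negative inputs, unchanged for positive inputs
    return max(0.0, z)

def sigmoid(z):
    # Squashes any real number into the range (0, 1)
    return 1.0 / (1.0 + math.exp(-z))

def identity(z):
    return z

def dense(inputs, weights, biases, activation):
    """One fully connected layer: neuron j outputs activation(sum_i w[j][i] * x[i] + b[j])."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias  # multiply and sum
        outputs.append(activation(z))                                   # activate
    return outputs

# Hand-picked (not learned) weights for a 2-input, 2-hidden, 1-output network.
HIDDEN_W = [[1.0, 1.0],   # hidden neuron 1: x1 + x2
            [1.0, 1.0]]   # hidden neuron 2: x1 + x2 - 1 (via its bias)
HIDDEN_B = [0.0, -1.0]
OUTPUT_W = [[1.0, -2.0]]  # output neuron: h1 - 2*h2
OUTPUT_B = [0.0]

def forward(x):
    hidden = dense(x, HIDDEN_W, HIDDEN_B, relu)           # input -> hidden (ReLU)
    output = dense(hidden, OUTPUT_W, OUTPUT_B, identity)  # hidden -> output (linear)
    return output[0]

for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, forward(x))  # XOR: 0.0, 1.0, 1.0, 0.0

print(sigmoid(0.0))  # 0.5
```

A trained network would arrive at its own weights through the learning process described later in this article; the point here is only the multiply, sum, activate sequence each neuron performs, repeated layer by layer.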

All the learning in a neural network happens by adjusting the weights (and biases) of these connections. Initially, the weights are set to small random values. During training, they are tweaked little by little to bring the network’s predictions closer to the truth. Despite the simple operations at each neuron (multiply, sum, activate), a network with many neurons and layers can represent very complex functions. This layered structure lets neural networks build up increasingly abstract representations. For example, in an image, early layers might detect edges, middle layers detect shapes, and later layers recognize whole objects.

Types of Neural Networks

There are various neural network architectures designed for different types of data and problems. Here are a few key types:

- Feedforward Neural Network (FNN)

The simplest kind where information moves only forward from input to output (no looping or memory). A multi-layer perceptron (with one or more hidden layers) is a classic feedforward network. These are used for basic tasks where each input is considered independently, like straightforward classification or regression.

- Convolutional Neural Network (CNN)

A specialized network mostly used for image data. CNNs introduce convolutional layers that act like filters scanning over the input. This allows the network to learn local patterns like edges or textures, and because the same filter is applied at every position, a pattern can be detected wherever it appears in the image. CNNs also often use pooling layers to reduce dimensionality and computation. CNNs have become the standard for image recognition and computer vision, as they are very effective at detecting features in images. They’re used in tasks from identifying faces in photos to powering the vision systems of self-driving cars.

- Recurrent Neural Network (RNN)

A network designed for sequence data (like sentences, time series, or any data where order matters). RNNs have connections that loop back, allowing information to persist from one step of the sequence to the next. This gives them a kind of memory. For example, an RNN parsing a sentence can carry the context of earlier words as it reads later words. Variants like LSTM (Long Short-Term Memory) networks improve on basic RNNs by handling long-range dependencies better (preventing the network from “forgetting” things too quickly). RNNs and their variants have been widely used in language translation, speech recognition, and other sequential data tasks.

- Generative Adversarial Network (GAN)

This architecture pairs two networks against each other: a generator and a discriminator. The generator tries to create fake data (for example, generating realistic-looking images), while the discriminator tries to tell the fakes from real data. They both improve through this rivalry. GANs can create new data resembling the training data, such as generating photorealistic images of people who don’t exist. They’re famous for applications like deepfake videos, image upscaling, and AI-generated art.

- Transformer

A more recent architecture that has revolutionized natural language processing. Unlike RNNs, Transformers do not require data to be processed in sequence order. Instead, they use an attention mechanism to weigh the relevance of different parts of the input simultaneously. This architecture proved extremely effective for language tasks. Transformers are the backbone of advanced language models (like BERT or GPT), enabling large language models that can generate human-like text. They have also been applied to images and other domains, making them a very general and powerful neural network architecture.
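The core idea behind three of these architectures can be sketched in a few lines of plain Python. This is a toy illustration under simplifying assumptions, not how any library implements these layers: the convolution is one-dimensional with a single filter, the RNN has a single scalar hidden state, and the attention function handles one query using the scaled dot-product scoring of the original Transformer. The function names and all numbers below are made up for illustration.

```python
import math

def conv1d(signal, kernel):
    """CNN idea: slide one small filter along the input (no padding, stride 1).
    Like most deep learning libraries, this does not flip the kernel."""
    k = len(kernel)
    return [sum(kernel[j] * signal[i + j] for j in range(k))
            for i in range(len(signal) - k + 1)]

def rnn_step(h_prev, x, w_h, w_x, b):
    """RNN idea: the new hidden state mixes the previous state with the current input."""
    return math.tanh(w_h * h_prev + w_x * x + b)

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Transformer idea: average the values, weighted by how well each key matches the query."""
    scale = math.sqrt(len(query))
    scores = [sum(q * kv for q, kv in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]

# Convolution: a [-1, 1] filter acts as a simple edge detector.
edges = conv1d([0, 0, 0, 1, 1, 1], [-1, 1])
print(edges)  # [0, 0, 1, 0, 0]

# Recurrence: one input pulse, then zeros; the hidden state still carries a trace of it.
h = 0.0
for x in [1.0, 0.0, 0.0]:
    h = rnn_step(h, x, w_h=0.5, w_x=1.0, b=0.0)
print(h)

# Attention: the query resembles the first key, so the output leans toward the first value.
out = attention([5.0, 0.0], keys=[[1.0, 0.0], [0.0, 1.0]], values=[[10.0, 0.0], [0.0, 10.0]])
print(out)
```

The edge filter responds only where the signal jumps, and it would respond the same way if the jump were shifted; the RNN's hidden state stays non-zero after the input goes quiet; and the attention output is pulled toward the value whose key best matches the query.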

These are just a few examples. There are other types and hybrids (like autoencoders for data compression, graph neural networks for network-structured data, etc.), but the ones above cover the most common and influential architectures.

How Neural Networks Learn

Neural networks learn through a process of trial and error guided by data. The typical learning procedure is as follows:

- Forward pass: We feed input data into the network and get an output (the network’s prediction).

- Calculate loss: We compare the prediction to the true answer using a loss function. The loss is a measure of error. For example, how far off the predicted value is or how incorrect the predicted class probabilities are.

- Backpropagation (Gradient calculation): The network then figures out how to adjust itself by seeing how the error changes with respect to each weight. Using calculus (the chain rule), it computes the gradient of the loss with respect to each weight, essentially determining how much each weight contributed to the error.

- Weight update: The weights are then adjusted slightly in the direction that reduces the loss. This is usually done with an optimization algorithm like stochastic gradient descent (SGD) or a similar method, which moves each weight a small step (determined by a learning rate) in the direction that lowers the error.

This forward and backward cycle repeats for many iterations (over many training examples). Over time, the network’s weights tune themselves to minimize the loss, meaning the network’s predictions get closer to the correct outputs. This process is known as training the neural network.
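The four steps above can be traced in a minimal sketch: a single linear neuron (one weight `w`, one bias `b`) fitted to toy data generated from y = 2x + 1, using mean squared error as the loss. For brevity this uses plain full-batch gradient descent with gradients derived by hand; stochastic gradient descent would instead estimate the gradient from a random subset of examples at each step, and real frameworks compute gradients automatically. The function name, learning rate, and epoch count are illustrative choices.

```python
def train_linear_neuron(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y ~ w*x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0  # real networks start from small random values instead
    n = len(xs)
    history = []
    for _ in range(epochs):
        # 1. Forward pass: a prediction for every example
        preds = [w * x + b for x in xs]
        # 2. Loss: mean squared error between predictions and targets
        errors = [p - y for p, y in zip(preds, ys)]
        loss = sum(e * e for e in errors) / n
        history.append(loss)
        # 3. Gradients via the chain rule: dL/dw = mean(2*e*x), dL/db = mean(2*e)
        grad_w = sum(2 * e * x for e, x in zip(errors, xs)) / n
        grad_b = sum(2 * e for e in errors) / n
        # 4. Update: move each parameter a small step against its gradient
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, history

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]  # toy data generated from y = 2x + 1
w, b, history = train_linear_neuron(xs, ys)
print(round(w, 3), round(b, 3))  # 2.0 1.0
```

Comparing `history[0]` with `history[-1]` shows the loss shrinking toward zero as the parameters approach w = 2 and b = 1. A deep network repeats exactly this loop, just with millions of parameters and backpropagation to compute all their gradients.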

For example, if a network initially predicts an image of a cat as a dog, the training process will adjust the network’s weights so that it becomes more likely to label that image as a cat next time. With enough training examples, the network gradually improves its accuracy. In essence, the network learns from its mistakes. The calculated error flows backward through the network (backpropagation) and nudges the weights in a better direction.
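The cat/dog example can be made concrete with softmax probabilities and the cross-entropy loss, a common choice for classification. In this sketch the two raw scores (logits) are hypothetical numbers that favor “dog”, and for simplicity we take one gradient step on the logits themselves; in a real network the same gradient would keep flowing backward to update the weights that produced those scores. The sketch relies on the standard result that, for softmax followed by cross-entropy, the gradient with respect to each logit is its predicted probability minus 1 for the true class (minus 0 for the others).

```python
import math

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probs, true_index):
    """Loss is -log(probability assigned to the correct class)."""
    return -math.log(probs[true_index])

CLASSES = ["cat", "dog"]
logits = [0.5, 2.0]                 # hypothetical scores: the network currently favors "dog"
true_index = CLASSES.index("cat")   # but the image is actually a cat

probs = softmax(logits)
loss_before = cross_entropy(probs, true_index)

# Softmax + cross-entropy gradient: dLoss/dlogit_k = p_k - (1 if k is the true class else 0)
grads = [p - (1.0 if k == true_index else 0.0) for k, p in enumerate(probs)]
learning_rate = 1.0
logits = [z - learning_rate * g for z, g in zip(logits, grads)]

probs_after = softmax(logits)
loss_after = cross_entropy(probs_after, true_index)
print(f"p(cat): {probs[true_index]:.3f} -> {probs_after[true_index]:.3f}")
print(f"loss:   {loss_before:.3f} -> {loss_after:.3f}")
```

One step raises the score for “cat”, lowers the score for “dog”, and reduces the loss, which is the “nudge in a better direction” described above.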

One challenge during learning is overfitting, when a network memorizes the training data too closely and performs poorly on new data. To guard against this, practitioners use techniques like dropout (randomly dropping some neurons during training to force the network to generalize), and they evaluate the model on separate validation/test data. These practices help ensure the neural network not only learns the training examples but also captures patterns that generalize to unseen data.
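Dropout itself is simple to sketch. The version below is one common variant, inverted dropout, written in plain Python with a hypothetical `dropout` helper: during training, each activation is zeroed with probability `drop_prob` and the survivors are scaled up by 1 / (1 - `drop_prob`), so that at evaluation time the layer can simply pass values through unchanged. Libraries apply the same idea to whole tensors at once.

```python
import random

def dropout(activations, drop_prob, training, rng=random):
    """Inverted dropout: zero each activation with probability drop_prob during training."""
    if not training or drop_prob == 0.0:
        return list(activations)  # at evaluation time the layer does nothing
    keep_prob = 1.0 - drop_prob
    # Scale survivors by 1/keep_prob so the expected activation is unchanged.
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]

rng = random.Random(0)  # seeded so the example is repeatable
hidden = [0.8, 1.2, 0.5, 2.0, 0.3, 1.1]
print(dropout(hidden, drop_prob=0.5, training=True, rng=rng))  # some zeroed, the rest doubled
print(dropout(hidden, drop_prob=0.5, training=False))          # unchanged
```

Because a different random subset of neurons disappears on every training step, no single neuron can be relied on to memorize a particular example.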

Advantages and Challenges

Advantages

Neural networks are extremely powerful function approximators. They can capture complex, non-linear relationships in data that simpler models might miss. Neural networks have achieved record-breaking accuracy on a range of problems, from image classification to speech recognition.

Another advantage is their ability to learn features automatically from raw data. In older machine learning, a lot of work went into manually crafting features (for example, edge detectors for images); neural networks learn such features internally. Moreover, neural nets tend to improve as you feed them more data: where traditional algorithms often plateau as the dataset grows, neural nets often keep getting better (up to a point). In practical applications, neural networks can handle large-scale data and find patterns that humans might not notice, making them invaluable for tasks like medical image analysis or anomaly detection across thousands of sensor readings.

Challenges

Despite their power, neural networks have downsides. They usually need a very large amount of data to train effectively, and that training data has to be representative. If it’s biased or insufficient, the network’s performance will suffer. Neural networks are also computationally intensive. Training a deep network can be time-consuming and may require specialized hardware like GPUs. Another well-known issue is that neural networks are often a “black box.” It’s not easy to interpret why a trained network made a specific decision, since the knowledge is encoded in hundreds of thousands (or millions) of weights across many layers. This lack of transparency is problematic in areas where explanations are needed (for instance, in healthcare or finance decisions). Efforts in explainable AI aim to address this by developing methods to peek into what a network focuses on or how it arrives at decisions.
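A quick count shows how fast the weights add up. Assuming a fully connected network (every neuron linked to every neuron in the next layer, plus one bias per neuron), the hypothetical layer sizes below, for 28x28-pixel images, two hidden layers, and 10 output classes, already give hundreds of thousands of parameters. The `count_parameters` helper is our own.

```python
def count_parameters(layer_sizes):
    """Weights plus biases in a fully connected network with the given layer widths."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight per connection between the two layers
        total += n_out         # one bias per neuron in the receiving layer
    return total

# Hypothetical network: 784 inputs (28x28 pixels), hidden layers of 256 and 128, 10 outputs.
print(count_parameters([784, 256, 128, 10]))  # 235146
```

None of those 235,146 numbers means much on its own, which is why explaining a specific decision requires dedicated tools rather than reading the weights directly.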

Neural networks also have many hyperparameters (architecture choices, learning rates, etc.) that can be tricky to tune. Getting the best performance often requires experimentation and experience. Lastly, if not carefully managed, neural nets can overfit or behave unpredictably when encountering data very different from what they saw in training. Deploying them in real-world systems thus requires careful validation and sometimes ongoing monitoring.

Real World Applications

Neural networks are employed across many industries and tasks today. Here are some notable examples.

- Computer Vision

Neural nets (especially CNNs) are widely used for tasks like facial recognition, object detection, and medical image analysis. For example, they help self-driving cars “see” the road by identifying pedestrians, other vehicles, and traffic signs from camera images.

- Natural Language Processing

Neural networks power voice assistants (speech recognition and voice synthesis), machine translation between languages, and text analysis. They also filter spam emails and even help with writing suggestions (like auto-complete on your phone). Large language model chatbots are neural networks trained on massive text datasets to generate human-like responses.

- Healthcare

Neural networks have quietly become important tools in hospitals and labs around the world. Convolutional neural networks (CNNs) analyze X-rays and MRI scans to help spot small tumors or hairline fractures that even experienced radiologists might miss at the end of a long shift. Beyond image analysis, models trained on large collections of past patient records can predict which patients are at higher risk of returning to the hospital, giving doctors an early warning. In drug discovery, neural networks can quickly estimate how candidate molecules are likely to behave, helping researchers decide which compounds are worth testing in the lab and speeding up work that once relied on slow trial and error.

- Finance

Banks and financial institutions use neural networks for fraud detection, monitoring transactions to flag unusual patterns that could indicate credit card fraud. Neural nets also drive algorithmic trading and stock market prediction models by looking for subtle patterns in historical market data. Additionally, they are used for credit scoring and risk assessment, learning from large datasets of financial behavior.

- Recommender Systems

When Netflix suggests a TV show you might like or Amazon recommends a product, neural networks are often at work. These systems learn your preferences by analyzing your past behavior (and data from other users with similar tastes) to predict what you’ll enjoy next. This personalization is powered by neural network models that excel at detecting complex patterns in user data.

These examples barely scratch the surface. Neural networks also appear in customer service (AI chatbots handling support queries), cybersecurity (intrusion detection systems), creative arts (generating music or artwork), and many other areas. The common theme is that wherever there is complex data and a need to identify patterns or make predictions, neural networks have become a go-to solution.

Future Trends

The field of neural networks is still evolving rapidly. One major focus area is explainability and fairness: making neural networks more transparent and ensuring they make unbiased decisions. Techniques in explainable AI aim to shed light on why a model made a prediction (for example, by highlighting important features), which is crucial for trust in applications like healthcare or finance. There’s also a growing emphasis on ethics and privacy, so future AI systems will likely incorporate mechanisms to prevent biased outcomes and protect sensitive data.

Another trend is improving the efficiency and accessibility of neural networks. Researchers are creating smaller, optimized models that can run on everyday devices (smartphones, IoT gadgets), enabling AI at the edge without needing a powerful cloud server.

This means features like real-time translation or image recognition can happen locally, with less lag and more privacy. We’re also seeing progress in automated machine learning (AutoML), which helps design and tune neural networks with minimal human intervention. In the future, even non-experts might train effective neural nets by leveraging AutoML tools.

Overall, neural networks are becoming more integrated and ubiquitous. They are getting better at handling multiple types of data together (for example, models that understand both images and text), and very large general-purpose models continue to push the envelope of what AI can do. We can expect neural networks to be increasingly present in daily technology, more user-friendly to develop, and more trustworthy and efficient in their operation.

Conclusion

Neural networks have come a long way from their humble beginnings. Today, they are at the heart of many AI breakthroughs, enabling computers to see, hear, and make decisions in ways not possible before. In this article, we explained neural networks in simple terms: they are layered networks of artificial neurons that learn from data by adjusting connection weights. We covered a bit of their history (from the perceptron to the deep learning revolution), their basic structure (neurons, layers, and activations), and some common types like CNNs, RNNs, GANs, and Transformers. We also discussed how they learn (through backpropagation and iterative training) and weighed their advantages (powerful learning capability, automatic feature learning) against challenges (data hunger, computational cost, black-box nature).

Neural networks are transforming industries and everyday life, from improving medical diagnoses to personalizing your news feed. And the field keeps evolving, with efforts to make models more efficient, understandable, and broadly capable. As a beginner, you may find neural networks overwhelming at first, but they are also exciting and rewarding to learn. With accessible libraries and a huge community, you can start building simple neural models and gradually explore more complex ones.

In summary, neural networks are a foundational technology in modern AI that will likely continue to grow in importance. They mimic a bit of how brains work to enable machines to learn from experience. With this overview, you have a solid foundation to explore further and even experiment with neural networks yourself. The world of AI is moving fast and neural networks are leading the charge, one layer at a time.