Classification Report Explained — Precision, Recall, Accuracy, Macro average, and Weighted Average

Why do we need a classification report?

When we’re using a classification model to predict things like whether an email is spam or not, we need to know how well it’s doing. That’s where a classification report comes in handy. It gives us a detailed summary of how our model is performing.

Think of it like getting a report card in school. Just like a report card tells you how well you’re doing in different subjects, a classification report tells us how well our model is doing in different aspects of classification.

In the classification report, we can see things like accuracy, which tells us overall how often our model is correct. We also see precision, recall, and F1 Score, which give us insights into how well our model is doing at correctly identifying different classes (like spam and non-spam emails).

By looking at these metrics in the classification report, we can understand if our model is doing a good job on new data that it hasn’t seen before. It’s like checking our model’s performance to make sure it’s ready to tackle real-world tasks effectively.

Besides the classification report, there are many other ways to evaluate classification models, such as accuracy, the ROC curve and the area under it (ROC AUC), and the confusion matrix. To learn more, see the scikit-learn documentation on model evaluation.

Let’s talk about the classification report

You can generate this report with the following code:

```python
from sklearn.metrics import classification_report

# y_test: true labels of the test set; y_preds: the model's predictions on X_test
print(classification_report(y_test, y_preds))
```

Here, y_test holds the actual labels of the test data (X_test), and y_preds holds the model's predictions on X_test, which is data the model did not see during training.

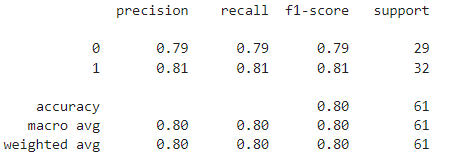

Here, assume 0 is the negative class (not a spam email) and 1 is the positive class (a spam email). We'll use this labeling for the rest of the article.
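If you want to produce a report like this without your own dataset, here is a minimal end-to-end sketch. It assumes scikit-learn is installed and uses a synthetic, imbalanced dataset from make_classification as a stand-in for spam data; the model (LogisticRegression), the 80/20 class split, and the target_names labels are illustrative choices, so the numbers will differ from the image above.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import classification_report
from sklearn.model_selection import train_test_split

# Synthetic binary data standing in for "not spam" (0) vs "spam" (1); illustrative only
X, y = make_classification(n_samples=1000, n_features=20, weights=[0.8, 0.2], random_state=42)

# Hold out 25% of the data as unseen test data, keeping the class ratio with stratify
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)

model = LogisticRegression(max_iter=1000)
model.fit(X_train, y_train)
y_preds = model.predict(X_test)

# target_names only changes how the rows are labeled in the printed report
report = classification_report(y_test, y_preds, target_names=["not spam", "spam"])
print(report)
```

The printed table has one row per class plus the accuracy, macro avg, and weighted avg rows discussed below.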

Before moving forward, you need to know these four terms:

- True Positives (TP): the instances where the model correctly predicts the positive class (spam email) as positive.

- False Positives (FP): the instances where the model incorrectly predicts a negative instance (not a spam email) as positive.

- True Negatives (TN): the instances where the model correctly predicts the negative class as negative.

- False Negatives (FN): the instances where the model incorrectly predicts a positive instance as negative.
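To make these four counts concrete, here is a small sketch using scikit-learn's confusion_matrix on ten hand-made labels (the y_test and y_preds values are invented for illustration and reused in later examples). For binary labels ordered [0, 1], scikit-learn lays the matrix out as [[TN, FP], [FN, TP]], so ravel() yields the counts in that order.

```python
from sklearn.metrics import confusion_matrix

# Invented toy labels: 1 = spam (positive), 0 = not spam (negative)
y_test = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
y_preds = [0, 0, 0, 0, 1, 1, 1, 1, 0, 1]

# Rows are actual classes, columns are predicted classes:
# [[TN, FP],
#  [FN, TP]]
cm = confusion_matrix(y_test, y_preds, labels=[0, 1])
tn, fp, fn, tp = (int(v) for v in cm.ravel())

print(f"TP={tp}, FP={fp}, TN={tn}, FN={fn}")  # TP=3, FP=2, TN=4, FN=1
```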

Let’s dive into the metrics in the classification report.

Precision

Precision measures how many of the emails your model predicted as spam were actually spam (TP), compared to all the emails your model said were spam (TP + FP), whether it was right or wrong.

We can calculate precision as TP / (TP + FP).

So, if your precision is 0.8, it means that out of all the emails your model classified as spam, 80% of them were actually spam. In other words, it’s like saying, “When my model said an email was spam, it was correct 80% of the time.”
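As a quick check of the formula, this sketch counts TP and FP by hand on the same invented toy labels and compares the result with scikit-learn's precision_score, which treats label 1 as the positive class by default.

```python
from sklearn.metrics import precision_score

# Invented toy labels: 1 = spam, 0 = not spam
y_test = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
y_preds = [0, 0, 0, 0, 1, 1, 1, 1, 0, 1]

# Count true positives and false positives for the spam class (label 1)
tp = sum(1 for actual, pred in zip(y_test, y_preds) if actual == 1 and pred == 1)
fp = sum(1 for actual, pred in zip(y_test, y_preds) if actual == 0 and pred == 1)

manual_precision = tp / (tp + fp)                # 3 / (3 + 2)
sk_precision = precision_score(y_test, y_preds)  # pos_label defaults to 1

print(f"{manual_precision:.2f} {sk_precision:.2f}")  # 0.60 0.60
```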

Recall (TPR — True Positive Rate)

Recall measures how many of the emails your model correctly identified as spam (TP) compared to all the spam emails that were actually there (TP + FN).

We can calculate recall as TP / (TP + FN).

So, if your recall is 0.8, it means that out of all the spam emails that existed, your model managed to identify 80% of them. In simpler terms, it’s like saying, “My model caught 80% of all the spam emails that were sent.”
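The same hand count works for recall; only the denominator changes from predicted positives to actual positives. A sketch on the invented toy labels, checked against scikit-learn's recall_score:

```python
from sklearn.metrics import recall_score

# Invented toy labels: 1 = spam, 0 = not spam
y_test = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
y_preds = [0, 0, 0, 0, 1, 1, 1, 1, 0, 1]

# True positives and false negatives for the spam class (label 1)
tp = sum(1 for actual, pred in zip(y_test, y_preds) if actual == 1 and pred == 1)
fn = sum(1 for actual, pred in zip(y_test, y_preds) if actual == 1 and pred == 0)

manual_recall = tp / (tp + fn)             # 3 / (3 + 1)
sk_recall = recall_score(y_test, y_preds)  # pos_label defaults to 1

print(f"{manual_recall:.2f} {sk_recall:.2f}")  # 0.75 0.75
```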

F1 Score

The F1 Score is the harmonic mean of precision and recall. It measures how well our model balances correctly identifying spam emails (precision) and catching all the spam emails that were actually there (recall).

F1 = 2 × precision × recall / (precision + recall)

So, if your F1 Score is high, your model has found a good balance between precision and recall. It’s like saying, “My model is doing a great job at both correctly classifying spam emails and capturing all the spam that’s out there.” If the F1 Score is low, at least one of precision or recall is low. Because the harmonic mean is pulled toward the smaller of the two values, a model that favors one metric at the expense of the other (for example, high precision but very low recall) still ends up with a low F1 Score.
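Here is a small sketch of the formula. The helper f1_from is a hypothetical name written for this example; the toy labels are invented, and the last line plugs in made-up precision and recall values to show how the harmonic mean punishes imbalance between the two.

```python
from sklearn.metrics import f1_score


def f1_from(precision, recall):
    """Hypothetical helper: harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Invented toy labels: precision = 3/5 and recall = 3/4 for the spam class
y_test = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
y_preds = [0, 0, 0, 0, 1, 1, 1, 1, 0, 1]

print(f"{f1_from(0.6, 0.75):.4f}")        # 0.6667
print(f"{f1_score(y_test, y_preds):.4f}")  # 0.6667

# A lopsided model: perfectly precise but catches only 10% of spam.
# The arithmetic mean would be 0.55; the harmonic mean stays near the smaller value.
print(f"{f1_from(1.0, 0.1):.4f}")  # 0.1818
```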

Accuracy

Accuracy measures how many emails your model classified correctly, whether they were spam or not, compared to all the emails it looked at.

We can calculate accuracy as (TP + TN) / (TP + TN + FP + FN).

So, if your accuracy is high, a large portion of the emails your model looked at were classified correctly. It’s like saying, “My model got it right most of the time.” If accuracy is low, your model is making more mistakes. Be careful with accuracy on imbalanced data, though: if 95% of emails are not spam, a model that labels every email as not spam reaches 95% accuracy while catching no spam at all.
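This sketch computes accuracy on the invented toy labels and then shows the imbalance trap with a hypothetical dataset of 950 non-spam and 50 spam emails, where a "model" that always predicts not spam looks accurate but has zero recall.

```python
from sklearn.metrics import accuracy_score, recall_score

# Invented toy labels: 1 = spam, 0 = not spam
y_test = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
y_preds = [0, 0, 0, 0, 1, 1, 1, 1, 0, 1]

# (TP + TN) / all = (3 + 4) / 10
acc = accuracy_score(y_test, y_preds)
print(f"{acc:.2f}")  # 0.70

# Hypothetical imbalanced data: 950 non-spam (0) and 50 spam (1) emails,
# with a "model" that labels every email as not spam
y_true_imbalanced = [0] * 950 + [1] * 50
y_all_negative = [0] * 1000

baseline_acc = accuracy_score(y_true_imbalanced, y_all_negative)
baseline_recall = recall_score(y_true_imbalanced, y_all_negative)
print(f"{baseline_acc:.2f} {baseline_recall:.2f}")  # 0.95 0.00
```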

Support

Support is the number of actual instances of each class in y_test, that is, the number of samples each per-class metric was calculated on.
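A short sketch showing where the support column comes from: precision_recall_fscore_support with average=None returns per-class arrays, and its support values are simply the class counts in the true labels (toy labels invented for illustration).

```python
from collections import Counter

from sklearn.metrics import precision_recall_fscore_support

# Invented toy labels: 1 = spam, 0 = not spam
y_test = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
y_preds = [0, 0, 0, 0, 1, 1, 1, 1, 0, 1]

# average=None returns one value per class, ordered by label (0, then 1)
precision, recall, f1, support = precision_recall_fscore_support(y_test, y_preds, average=None)
print(support.tolist())  # [6, 4]

# Support is just how often each class actually occurs in y_test
print(sorted(Counter(y_test).items()))  # [(0, 6), (1, 4)]
```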

Macro Average

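The macro average is the plain (unweighted) mean of each class's precision, recall, and F1 score. It doesn't take class imbalance into account; it treats all classes equally. So if you have class imbalance, pay attention to this metric: poor performance on a small class pulls the macro average down even when the large class is predicted well.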

What is meant by class imbalance?

Class imbalance occurs when one class in a dataset has significantly more instances than another class. Let’s illustrate this with an example:

Imagine you’re working on a project to detect fraudulent transactions in a banking dataset. In this dataset, most transactions are legitimate (non-fraudulent), but there’s a small percentage of transactions that are fraudulent.

Let’s say out of 1000 transactions:

- 950 transactions are legitimate (non-fraudulent).

- 50 transactions are fraudulent.

In this scenario, we have a class imbalance because the number of legitimate transactions far outweighs the number of fraudulent transactions.

Class imbalance can pose challenges for machine learning models because they tend to learn from the majority class (legitimate transactions) more effectively, potentially ignoring the minority class (fraudulent transactions). As a result, the model may perform poorly in identifying the minority class, which is often the class of interest (fraudulent transactions in this case).

Dealing with class imbalance requires special attention, such as using techniques like resampling (oversampling or undersampling), adjusting class weights, or using algorithms specifically designed to handle imbalanced datasets. These strategies help ensure that the model learns effectively from both classes and achieves better performance in detecting the minority class.
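As one concrete example of adjusting class weights, this sketch uses scikit-learn's compute_class_weight with the "balanced" heuristic, which sets each class's weight to n_samples / (n_classes × count_of_class). The labels reproduce the 950/50 split from the fraud example; whether these weights are the right choice for a given problem is a judgment call.

```python
import numpy as np
from sklearn.utils.class_weight import compute_class_weight

# 950 legitimate (0) and 50 fraudulent (1) transactions
y = np.array([0] * 950 + [1] * 50)

# "balanced": n_samples / (n_classes * count_of_class)
weights = compute_class_weight(class_weight="balanced", classes=np.array([0, 1]), y=y)

# Same numbers computed by hand: 1000 / (2 * 950) and 1000 / (2 * 50)
manual = len(y) / (2 * np.bincount(y))

print(dict(zip([0, 1], weights.round(3).tolist())))  # {0: 0.526, 1: 10.0}
```

Each fraudulent transaction then counts about 19 times as much as a legitimate one during training. Many scikit-learn classifiers, such as LogisticRegression, also accept class_weight="balanced" directly.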

Let’s get back to where we left off.

In our context, let’s say we have different classes in our dataset, like spam and non-spam emails. The macro average calculates the average performance across all classes by considering each class equally.

So, when you calculate the macro average for a metric like precision, recall, or F1 Score, you compute that metric for each class separately and then take the simple mean of those values. It’s like saying, “Let’s see how well our model performed for each category, and then find the average performance across all categories.”
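Here is a sketch of the macro average for F1 on the invented toy labels: take the per-class scores (average=None) and average them with equal weight, then compare with scikit-learn's average="macro".

```python
import numpy as np
from sklearn.metrics import f1_score

# Invented toy labels: 1 = spam, 0 = not spam
y_test = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
y_preds = [0, 0, 0, 0, 1, 1, 1, 1, 0, 1]

# One F1 score per class, ordered by label: class 0 -> 8/11, class 1 -> 2/3
per_class_f1 = f1_score(y_test, y_preds, average=None)

# Macro average: plain mean, every class counts the same
manual_macro = per_class_f1.mean()
sk_macro = f1_score(y_test, y_preds, average="macro")

print(f"{manual_macro:.4f} {sk_macro:.4f}")  # 0.6970 0.6970
```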

Weighted average

Weighted average is like giving more importance to some opinions in a group discussion based on their expertise. Imagine you’re having a discussion with your friends about which movie to watch. You know some friends are movie buffs and have better judgment, so you might give more weight to their opinions when making the final decision.

In our context, when we talk about metrics like precision, recall, or F1 Score, weighted average considers the performance of each class but gives more weight to classes with more samples. This means that the performance of larger classes has a bigger impact on the overall average.

So, when you calculate the weighted average, you’re essentially saying, “Let’s consider the performance of each class, but give more importance to the classes that have more data.” This accounts for the different class sizes, but it also means that poor performance on a small minority class can be hidden by good performance on a large class, which the macro average would expose.
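The weighted average uses the same per-class scores but weights each one by its support. A sketch on the invented toy labels, checked against scikit-learn's average="weighted":

```python
import numpy as np
from sklearn.metrics import f1_score

# Invented toy labels: 1 = spam, 0 = not spam
y_test = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
y_preds = [0, 0, 0, 0, 1, 1, 1, 1, 0, 1]

per_class_f1 = f1_score(y_test, y_preds, average=None)  # [8/11, 2/3]
support = np.bincount(y_test)  # actual count of each class: [6, 4]

# Weighted average: each class's F1 counts in proportion to its support
manual_weighted = np.average(per_class_f1, weights=support)
sk_weighted = f1_score(y_test, y_preds, average="weighted")

print(f"{manual_weighted:.4f} {sk_weighted:.4f}")  # 0.7030 0.7030
```

Here the weighted F1 (0.7030) comes out slightly above the macro F1 (0.6970) because the larger class 0 also has the higher per-class F1.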

I hope you understood the basics of the classification report. If you found this useful, follow me for future articles. It motivates me to write more for you.
